
💡 This post is a summary of 'Autonomous Driving Open Dataset: KITTI Dataset (Visual Odometry, 3D Object Detection)'.

It covers the KITTI Dataset, one of the best-known sensor datasets for autonomous vehicles.

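Introduction to the KITTI Dataset

Dataset Overview (KITTI dataset)

- Collected by driving a car around the mid-size city of Karlsruhe, through rural areas, and on highways.

- The dataset consists of data recorded with a LiDAR and several cameras, plus labels for part of that data.

- Created by: KIT (Karlsruhe Institute of Technology) and Toyota Technological Institute at Chicago

- Official dataset page: https://www.cvlibs.net/datasets/kitti/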

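Dataset Overview (KITTI Sensors)

Sensor models

- Inertial Navigation System (GPS/IMU): OXTS RT 3003 (fast 250 Hz update rate; high accuracy, with errors on the order of centimeters)

- Laserscanner: Velodyne HDL-64E (10 Hz, 64 laser beams, about 100k points per scan)

- Cameras (maximum shutter time: 2 ms; images are captured on the LiDAR's trigger)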

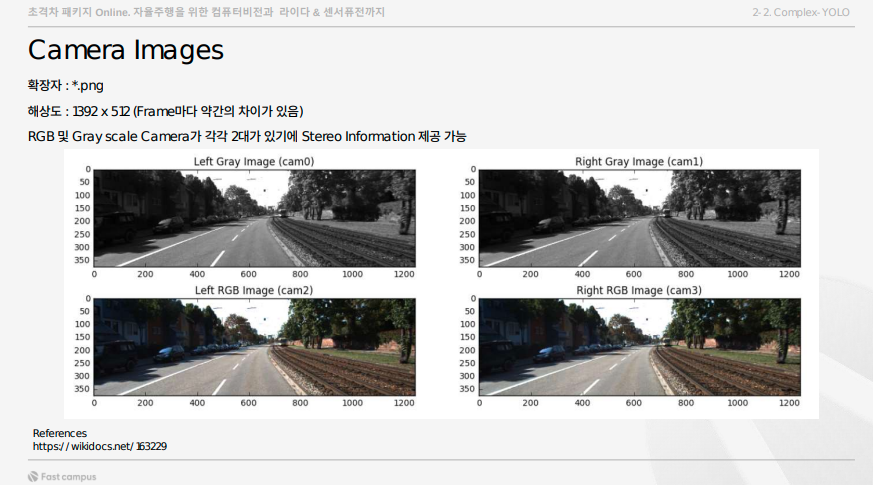

- Grayscale cameras, 1.4 Megapixels: Point Grey Flea 2 (FL2-14S3M-C)

- Color cameras, 1.4 Megapixels: Point Grey Flea 2 (FL2-14S3C-C)

- Varifocal lenses, 4-8 mm: Edmund Optics NT59-917

Dataset contents

- Raw (unsynced+unrectified) and processed (synced+rectified) grayscale stereo sequences (0.5 Megapixels, stored in png format)

- Raw (unsynced+unrectified) and processed (synced+rectified) color stereo sequences (0.5 Megapixels, stored in png format)

- 3D Velodyne point clouds (100k points per frame, stored as binary float matrix)

- 3D GPS/IMU data (location, speed, acceleration, meta information, stored as text file)

- Calibration (Camera, Camera-to-GPS/IMU, Camera-to-Velodyne, stored as text file)

- 3D object tracklet labels (cars, trucks, trams, pedestrians, cyclists, stored as xml file)
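Because the Velodyne scans are stored as a flat binary float matrix, loading one only takes a reshape. The minimal NumPy sketch below assumes the usual layout of four float32 values per point (x, y, z, reflectance). The function name `load_velodyne_scan` is hypothetical and does not come from any KITTI tool.

```python
import numpy as np


def load_velodyne_scan(path):
    """Load one KITTI Velodyne scan (.bin) as an (N, 4) float32 array.

    Assumed layout: consecutive float32 values, four per point:
    x, y, z (meters, LiDAR frame) and reflectance.
    """
    scan = np.fromfile(path, dtype=np.float32)
    return scan.reshape(-1, 4)
```

For example, `load_velodyne_scan("dataset/sequences/00/velodyne/000000.bin")` returns an (N, 4) array, and `points[:, :3]` gives the xyz coordinates. The path is only illustrative.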

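KITTI Dataset Types

Visual Odometry / SLAM Evaluation 2012

22 stereo sequences with a total length of 39.2 km. Sequences 00-10 come with ground-truth trajectories for training. Sequences 11-21 have no public ground truth and are used for evaluation.

Ground truth: the poses from the GPS/IMU localization unit, projected into the coordinate frame of the left camera.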
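In the odometry devkit, each ground-truth file poses/XX.txt has one line per frame containing 12 numbers. These are the first three rows of a 4×4 homogeneous matrix, stored row-major, which maps points from frame i into the left-camera frame of frame 0. The sketch below parses those lines and sums the distances between consecutive camera positions to get the trajectory length. The helper names `load_poses` and `trajectory_length` are hypothetical.

```python
import numpy as np


def load_poses(lines):
    """Parse KITTI odometry pose lines into an (N, 4, 4) array of homogeneous matrices."""
    poses = []
    for line in lines:
        values = [float(v) for v in line.split()]
        if not values:
            continue  # skip blank lines
        pose = np.eye(4)
        pose[:3, :4] = np.array(values).reshape(3, 4)  # row-major 3x4 [R | t]
        poses.append(pose)
    return np.stack(poses)


def trajectory_length(poses):
    """Sum of Euclidean distances between consecutive camera positions (translation column)."""
    positions = poses[:, :3, 3]
    steps = np.diff(positions, axis=0)
    return float(np.linalg.norm(steps, axis=1).sum())
```

Usage: `with open("dataset/poses/00.txt") as f: poses = load_poses(f)`. Note that this file exists only for sequences 00-10.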

Directory structure

```text
├── dataset
│   ├── poses
│   └── sequences
│       ├── 00
│       │   ├── image_0
│       │   ├── image_1
│       │   ├── image_2
│       │   ├── image_3
│       │   └── velodyne
│       ├── 01
│       │   ├── image_0
│       │   ├── image_1
│       │   ├── image_2
│       │   ├── image_3
│       │   └── velodyne
│       ...
│       └── 21
│           ├── image_0
│           ├── image_1
│           ├── image_2
│           ├── image_3
│           └── velodyne
├── devkit
│   └── cpp
```

Dataset download

Visual Odometry / SLAM Evaluation 2012 https://www.cvlibs.net/datasets/kitti/eval_odometry.php

```bash
#!/bin/bash

wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_odometry_gray.zip
wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_odometry_color.zip
wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_odometry_velodyne.zip
wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_odometry_calib.zip
wget https://s3.eu-central-1.amazonaws.com/avg-kitti/data_odometry_poses.zip
wget https://s3.eu-central-1.amazonaws.com/avg-kitti/devkit_odometry.zip

unzip '*.zip'
```

- Download odometry data set (grayscale, 22 GB)

- Download odometry data set (color, 65 GB)

- Download odometry data set (velodyne laser data, 80 GB)

- Download odometry data set (calibration files, 1 MB)

- Download odometry ground truth poses (4 MB)

- Download odometry development kit (1 MB)

- Lee Clement and his group (University of Toronto) have written some python tools for loading and parsing the KITTI raw and odometry datasets
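After unzipping, each sequence folder stores one file per frame, named with a zero-padded six-digit index (for example, image_2/000000.png or velodyne/000000.bin). The small sketch below builds those paths for a given sequence and frame. It assumes the directory layout from the tree in this section. The function name and the `root` argument, which should point at the folder containing `sequences/`, are hypothetical.

```python
from pathlib import Path


def odometry_frame_paths(root, sequence, frame):
    """Return the file paths of one frame in the KITTI odometry layout.

    root: folder that contains 'sequences/' (hypothetical location).
    sequence: sequence number 0-21; frame: frame index within that sequence.
    """
    seq_dir = Path(root) / "sequences" / f"{sequence:02d}"
    name = f"{frame:06d}"
    # image_0/1: grayscale pair, image_2/3: color pair
    paths = {f"image_{cam}": seq_dir / f"image_{cam}" / f"{name}.png" for cam in range(4)}
    paths["velodyne"] = seq_dir / "velodyne" / f"{name}.bin"
    return paths
```

This sketch only builds paths and does not check whether the files exist. Call `.exists()` on the results if some archives (e.g. the color images) were not downloaded.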

Additional resources

- [Blog] Exploring KITTI Dataset: Visual Odometry for autonomous vehicles: https://medium.com/@jaimin-k/exploring-kitti-visual-ododmetry-dataset-8ac588246cdc

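3D Object Detection

Provides 3D bounding boxes for objects such as cars, vans, trucks, pedestrians, cyclists, and trams. The benchmark consists of 7,481 training images and 7,518 test images, together with the corresponding point clouds.

The labels are accurate because the objects were annotated on the 3D point clouds from the Velodyne system and then projected into the images.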
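The projection between LiDAR and image uses the matrices in each object calibration file (keys `P2`, `R0_rect`, `Tr_velo_to_cam`). The object development kit's readme gives the formula as x = P2 · R0_rect · Tr_velo_to_cam · y, where R0_rect and Tr_velo_to_cam are padded to 4×4. The NumPy sketch below applies that formula. It assumes the calibration lines have already been parsed into arrays. The function name is hypothetical, and points behind the camera are dropped.

```python
import numpy as np


def project_velo_to_image(points, P2, R0_rect, Tr_velo_to_cam):
    """Project (N, 3) Velodyne points into the left color image (camera 2).

    P2: (3, 4) projection matrix, R0_rect: (3, 3) rectifying rotation,
    Tr_velo_to_cam: (3, 4) Velodyne-to-camera transform.
    Returns (M, 2) pixel coordinates and the boolean mask of points kept.
    """
    R0 = np.eye(4)
    R0[:3, :3] = R0_rect           # pad R0_rect to 4x4
    Tr = np.eye(4)
    Tr[:3, :4] = Tr_velo_to_cam    # pad Tr_velo_to_cam to 4x4

    pts_h = np.hstack([points, np.ones((points.shape[0], 1))])  # homogeneous (N, 4)
    cam = R0 @ Tr @ pts_h.T        # (4, N) rectified camera coordinates
    in_front = cam[2] > 0          # keep only points with positive depth
    img = P2 @ cam[:, in_front]    # (3, M) homogeneous pixel coordinates
    uv = (img[:2] / img[2]).T      # perspective division
    return uv, in_front
```

To project a scan loaded as an (N, 4) array, pass `points[:, :3]` so that the reflectance column is excluded.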

Directory structure

```text
kitti
├── ImageSets
│   ├── train.txt
│   └── val.txt
├── training
│   ├── calib
│   ├── image_2
│   ├── image_3
│   ├── label_2
│   └── velodyne
└── testing
    ├── calib
    ├── image_2
    ├── image_3
    └── velodyne
```

Each folder holds one file per frame, named with a six-digit index (e.g. training/image_2/000000.png, training/velodyne/000000.bin, training/label_2/000000.txt). image_3 appears only if you download the right color images. ImageSets holds the train/val split lists; it is not part of the official downloads and is usually provided by the training toolkit.

Dataset download

3D Object Detection Evaluation 2017: https://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=3d

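```bash
#!/bin/bash
# Usage: ./download_kitti.sh <target_dir>  (files are saved to <target_dir>/kitti)
# Downloads only LiDAR, calibration and labels; the image archives are listed below.
BASE_DIR="$1"/kitti
mkdir -p "$BASE_DIR"

url_velodyne="https://s3.eu-central-1.amazonaws.com/avg-kitti/data_object_velodyne.zip"
url_calib="https://s3.eu-central-1.amazonaws.com/avg-kitti/data_object_calib.zip"
url_label="https://s3.eu-central-1.amazonaws.com/avg-kitti/data_object_label_2.zip"

# -c resumes partial downloads; -N is dropped because wget ignores timestamping when -O is used
wget -c -O "$BASE_DIR/data_object_velodyne.zip" "$url_velodyne"
wget -c -O "$BASE_DIR/data_object_calib.zip" "$url_calib"
wget -c -O "$BASE_DIR/data_object_label_2.zip" "$url_label"
```

- Download left color images of object data set (12 GB)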

- Download right color images, if you want to use stereo information (12 GB)

- Download the 3 temporally preceding frames (left color) (36 GB)

- Download the 3 temporally preceding frames (right color) (36 GB)

- Download Velodyne point clouds, if you want to use laser information (29 GB)

- Download camera calibration matrices of object data set (16 MB)

- Download training labels of object data set (5 MB)

- Download object development kit (1 MB) (including 3D object detection and bird's eye view evaluation code)

- Download pre-trained LSVM baseline models (5 MB) used in Joint 3D Estimation of Objects and Scene Layout (NIPS 2011). These models are referred to as LSVM-MDPM-sv (supervised version) and LSVM-MDPM-us (unsupervised version) in the benchmark tables on the official page.

- Download reference detections (L-SVM) for training and test set (800 MB)

- Qianli Liao (NYU) has put together code to convert from KITTI to PASCAL VOC file format (documentation included, requires Emacs).

- Karl Rosaen (U.Mich) has released code to convert between KITTI, KITTI tracking, Pascal VOC, Udacity, CrowdAI and AUTTI formats.

- Jonas Heylen (TRACE vzw) has released pixel-accurate instance segmentations for all 7481 training images.

- David Stutz and Bo Li developed the 3D object detection benchmark.

- Koray Koca (TUM) has released conversion scripts to export LIDAR data to Tensorflow records.
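Each training label file (label_2/XXXXXX.txt) contains one object per line with 15 space-separated fields, as described in the object development kit readme:

- type
- truncated
- occluded
- alpha
- the 2D box (left, top, right, bottom)
- the 3D dimensions (height, width, length)
- the location (x, y, z) in camera coordinates
- rotation_y

Result files add a 16th score field, which this sketch ignores. The class and function names below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KittiObject:
    obj_type: str                               # e.g. 'Car', 'Pedestrian', 'DontCare'
    truncated: float                            # 0 (not truncated) to 1 (truncated)
    occluded: int                               # 0 visible, 1 partly, 2 largely occluded, 3 unknown
    alpha: float                                # observation angle, [-pi, pi]
    bbox: Tuple[float, float, float, float]     # 2D box: left, top, right, bottom (pixels)
    dimensions: Tuple[float, float, float]      # 3D size: height, width, length (meters)
    location: Tuple[float, float, float]        # x, y, z in camera coordinates (meters)
    rotation_y: float                           # yaw around the camera Y axis, [-pi, pi]


def parse_label_line(line: str) -> KittiObject:
    """Parse one label line; any extra trailing field (e.g. score) is ignored."""
    fields = line.split()
    v = [float(x) for x in fields[1:15]]
    return KittiObject(
        obj_type=fields[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        bbox=(v[3], v[4], v[5], v[6]),
        dimensions=(v[7], v[8], v[9]),
        location=(v[10], v[11], v[12]),
        rotation_y=v[13],
    )


def parse_label_file(lines) -> List[KittiObject]:
    """Parse all non-empty lines of a label file."""
    return [parse_label_line(line) for line in lines if line.strip()]
```

Objects of type `DontCare` mark regions that were not annotated, and training code usually filters them out.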

Calibration

Calibration Camera to Camera

For the camera calibration matrices, refer to calib_cam_to_cam.txt.

The file calib_cam_to_cam.txt contains parameters for four cameras (00 to 03).

- S_0x: the image size (width, height). You will rarely need it.

- K_0x: the intrinsic matrix. You can use it to create a cameraParameters object in MATLAB, but you have to transpose it and add 1 to the camera center because of MATLAB's 1-based indexing.

- D_0x: the distortion coefficients in the form [k1, k2, p1, p2, k3]. k1, k2, and k3 are the radial coefficients; p1 and p2 are the tangential coefficients.

- R_0x and T_0x: the camera extrinsics. They map points from the reference camera (camera 00) coordinate system into each camera's coordinate system, so R_00 is the identity and T_00 is zero (up to numerical precision).

- S_rect_0x, R_rect_0x, and P_rect_0x: the parameters of the rectified images.
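Each line of calib_cam_to_cam.txt has the form `key: values`, and all matrices are stored row-major. The sketch below reads those lines into a dictionary of NumPy arrays. It reshapes K, R and R_rect to 3×3 and P_rect to 3×4, and it keeps non-numeric entries such as the calibration timestamp as strings. The function name is hypothetical.

```python
import numpy as np


def parse_calib_cam_to_cam(lines):
    """Parse calib_cam_to_cam.txt lines into a dict of NumPy arrays.

    K_xx, R_xx and R_rect_xx become 3x3, P_rect_xx becomes 3x4; other
    numeric entries stay 1-D. Non-numeric entries are kept as strings.
    """
    calib = {}
    for line in lines:
        if ":" not in line:
            continue
        key, value = line.split(":", 1)  # split only on the first colon
        key = key.strip()
        try:
            data = np.array([float(v) for v in value.split()])
        except ValueError:
            calib[key] = value.strip()  # e.g. calib_time
            continue
        if key.startswith(("K_", "R_")):
            data = data.reshape(3, 3)   # K_xx, R_xx and R_rect_xx
        elif key.startswith("P_rect_"):
            data = data.reshape(3, 4)
        calib[key] = data
    return calib
```

Usage: `with open("calib_cam_to_cam.txt") as f: calib = parse_calib_cam_to_cam(f)`. After that, `calib["K_02"]` is the 3×3 intrinsic matrix of camera 02.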

To build a MATLAB stereoParameters object from these values:

- Pick the two cameras you want to use (for example 02 and 03, the color stereo pair).

- Create a cameraParameters object for each camera using the intrinsics and the distortion parameters (K_0x and D_0x). Don't forget to transpose K and adjust for 1-based indexing.

- From the extrinsics of the two cameras (R_0x and T_0x), compute the rotation R and translation t between the two cameras.

- Use the two cameraParameters objects together with R and t to create a stereoParameters object.
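The relative pose in the third step follows directly from the extrinsics. Suppose each camera maps a reference-frame point as x_cam = R_0x · x_ref + T_0x. Eliminating x_ref gives R = R_j · R_iᵀ and t = T_j − R · T_i, which together map points from camera i to camera j. The NumPy sketch below implements that step; the function name is hypothetical.

```python
import numpy as np


def relative_pose(R_i, T_i, R_j, T_j):
    """Rotation and translation that map points from camera i to camera j.

    Assumes x_cam = R @ x_ref + T for both cameras (x_ref in the reference
    camera frame). Then x_j = R_ij @ x_i + t_ij with
    R_ij = R_j @ R_i.T and t_ij = T_j - R_ij @ T_i.
    """
    R_i, R_j = np.asarray(R_i, float), np.asarray(R_j, float)
    T_i, T_j = np.asarray(T_i, float), np.asarray(T_j, float)
    R_ij = R_j @ R_i.T          # R_i is orthonormal, so its inverse is its transpose
    t_ij = T_j - R_ij @ T_i
    return R_ij, t_ij
```

For cameras 02 and 03, call `relative_pose(R_02, T_02, R_03, T_03)`. MATLAB's camera objects have historically used a transposed (row-vector) convention, so R may need to be transposed before it is passed to stereoParameters. Check the documentation for your MATLAB release.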

Calibration Camera to Camera (Code)

```python
import numpy as np

# Values copied from KITTI calib_cam_to_cam.txt (camera 02, the left color camera)
# S_02: 1.392000e+03 5.120000e+02
# K_02: 9.597910e+02 0.000000e+00 6.960217e+02 0.000000e+00 9.569251e+02 2.241806e+02 0.000000e+00 0.000000e+00 1.000000e+00
# D_02: -3.691481e-01 1.968681e-01 1.353473e-03 5.677587e-04 -6.770705e-02
# R_02: 9.999758e-01 -5.267463e-03 -4.552439e-03 5.251945e-03 9.999804e-01 -3.413835e-03 4.570332e-03 3.389843e-03 9.999838e-01
# T_02: 5.956621e-02 2.900141e-04 2.577209e-03

# Intrinsic matrix K_02 (the file stores it row-major)
calibration_matrix = np.array([[9.597910e+02, 0.000000e+00, 6.960217e+02],
                               [0.000000e+00, 9.569251e+02, 2.241806e+02],
                               [0.000000e+00, 0.000000e+00, 1.000000e+00]])

# Extrinsic rotation R_02 and translation T_02
R = np.array([[9.999758e-01, -5.267463e-03, -4.552439e-03],
              [5.251945e-03, 9.999804e-01, -3.413835e-03],
              [4.570332e-03, 3.389843e-03, 9.999838e-01]])
T = np.array([5.956621e-02, 2.900141e-04, 2.577209e-03])

# 3x4 extrinsic matrix [R | T]
R_T = np.hstack([R, T.reshape(3, 1)])

# Focal lengths (fx, fy) and principal point (ox, oy), in pixels
fx = calibration_matrix[0, 0]
ox = calibration_matrix[0, 2]
fy = calibration_matrix[1, 1]
oy = calibration_matrix[1, 2]
```

References

- [Blog] SLAM용 KITTI 데이터셋 (KITTI Odometry) 빠르게 다운로드 받는 방법: https://www.cv-learn.com/20240304-fast-kitti-download/

- [Blog] 3D Object Detection with Open3D-ML and PyTorch Backend: https://medium.com/@kidargueta/3d-object-detection-with-open3d-ml-and-pytorch-backend-b0870c6f8a85


![[Dataset] Autonomous Driving Open Dataset: KITTI Dataset (Visual Odometry/SLAM, 3D Object Detection)](https://img1.daumcdn.net/thumb/R750x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FEa3R9%2FbtsCKsdwR9n%2FvIRzJMScOnD8uibIUSheL0%2Fimg.png)